Problems 1-6: Pipelining

Problems 1 and 2 assume that instructions for a given program, executed by a pipelined processor, are broken down as follows:

| ADD | BEQ | LW | SW | |

| a. | 40% | 30% | 25% | 5% |

| b. | 60% | 10% | 20% | 10% |

1. Assuming there are no stalls and that 60% of conditional branches are taken, in what percentage of clock cycles does the BEQ actually cause execution to branch?

For a): CBEQ = 0.30*Ctotal ; BEQ instruction cycles comprise 30% of all instruction cycles, since each instruction takes the same number of cycles

CBEQ= CIF+ CID+ CALU+ CMEM+ CWB ; The number of cycles consumed by all BEQ instructions is the sum of the cycles consumed by a BEQ instruction's 5 stages: IF, ID, ALU, MEM, and WB.

The number of cycles per stage is the same, thus for 5 stages, each stage takes 20% of the number of cycles consumed by BEQ instructions:

CIF = CID = CWB = CMEM = CALU = 0.20*CBEQ = 0.20*(0.30*Ctotal) = 0.06*Ctotal ; the number of cycles used by each stage of a BEQ instruction is 20% of 30% of the total cycles, so 6%.

Of the 6% of total cycles used by the the ALU stage of a BEQ instruction, 60%

of those are where the conditional branch is taken. So 60% of 6%, or 3.6%, is

the percentage of total cycles where the ALU stage of BEQ causes execution to

branch

For b), similar analysis gives an answer of 1.2%

2. Assuming there are no stalls, in what percentage of all cycles are needed

to access data memory?

For a), memory is accessed by both LW and SW instructions, so 30% of all

instructions access memory. The MEM stage consumes 20% of the cycles for any LW

or SW instruction, so 20% of 30%, or 6% of all cycles access memory.

For b), the total percentage of LW and SW instructions is still 30%, so 6% of all cycles access memory.

Problems 3-5 assume a processor with datapath consisting of the following stages and stage latencies:

| IF | ID | EX | MEM | WB | |

| a. | 200ps | 120ps | 150ps | 190ps | 100ps |

| b. | 150ps | 200ps | 200ps | 200ps | 100ps |

3. In the absence of pipelining, what is the cycle time for a single instruction?

For a): With no pipelining, each instruction simply takes the sum of all

individual stages, so 200+120+150+190+100=760ps. The clock only has to "tick"

once per instruction, so the clock cycle time is 760ps.

For b): 150+200+200+200+100=850ps.

4. What is the cycle time for a single stage, if this same datapath were to be pipelined?

For a): With pipelining, the clock "ticks" each stage. Since a clock's ticks must be uniform, the "tick" time must be adjusted to accommodate the longest stage (200ps), and any shorter stages must be "stretched" to fit. Thus, all 5 stages take 200ps each, which is the cycle time for each stage.

For b): The longest stage is also 200ps, so the cycle time per stage is also 200ps as in a).

5. Assuming no stalls, what is the speedup achieved by pipelining these stages (once the pipeline is full and maximum throughput achieved)?

For a): Once the pipeline is full, an instruction completes every 200ps, vs every 760ps for an non-pipelined approach. Thus the speedup is 760ps/200ps = 3.8 (380%).

For b): The speedup is 850ps/200ps = 4.25 (425%).

6. Assume the ID stage stalls 25% of the time. What is the effective CPI?

If the ID stage NEVER stalled, the CPI would be 1, since every cycle, another

instruction would complete.

If the ID stage ALWAYS stalled, the CPI would be 2, since every 2 cycles,

another instruction would complete.

25% of the time, the CPI is 2, and for the other 75% of the time, the CPI is 1.

Thus the average CPI is 0.25*2 + 0.75 = 1.25

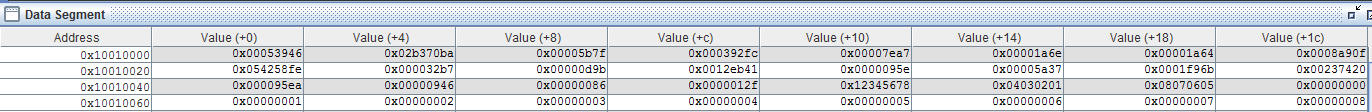

Problem 7: Cache Design

|

Set

Index |

Set (1 bit) |

Dirty |

Valid |

Tag (20 bits) |

Data |

|

00 |

1 |

1 |

1 |

0x10010 |

0x00053946

0x02b370ba

0x00005b7f

0x000392fc

|

|

0 |

0 |

0 |

0x00000 |

0x00000000 0x00000000 0x00000000 0x00000000 |

|

|

01 |

0 |

0 |

0 |

0x00000 |

0x00000000 0x00000000 0x00000000 0x00000000 |

|

0 |

0 |

0 |

0x00000 |

0x00000000 0x00000000 0x00000000 0x00000000 |

|

|

02 |

1 |

1 |

1 |

0x10010 |

0x054258fe 0x000032b7 0x00000d9b 0x0012eb41 0x054258fe 0x000032b7 0x054258fe 0x0012eb41 |

|

0 |

0 |

0 |

0x00000 |

0x00000000 0x00000000 0x00000000 0x00000000 |

|

|

03 |

0 |

0 |

0 |

0x00000 |

0x00000000 0x00000000 0x00000000 0x00000000 |

|

0 |

0 |

0 |

0x00000 |

0x00000000 0x00000000 0x00000000 0x00000000 |

|

|

04 |

1 |

1 |

1 |

0x10010 |

0x000095ea 0x00000946 0x00000086 0x0000012f 0x000095ea 0x00000946 0x00000086 0x0000012f |

|

0 |

0 |

0 |

0x00000 |

0x00000000 0x00000000 0x00000000 0x00000000 |

|

|

05 |

0 |

0 |

0 |

0x00000 |

0x00000000 0x00000000 0x00000000 0x00000000 |

|

0 |

0 |

0 |

0x00000 |

0x00000000 0x00000000 0x00000000 0x00000000 |

|

|

06 |

1 |

0 |

1 |

0x10010 |

0x00000001 0x00000002 0x00000003 0x00000004 |

|

0 |

0 |

0 |

0x00000 |

0x00000000 0x00000000 0x00000000 0x00000000 |

|

|

… |

… |

… |

… |

… |

… |

|

FF |

0 |

0 |

0 |

0x00000 |

0x00000000 0x00000000 0x00000000 0x00000000 |

|

0 |

0 |

0 |

0x00000 |

0x00000000 0x00000000 0x00000000 0x00000000 |

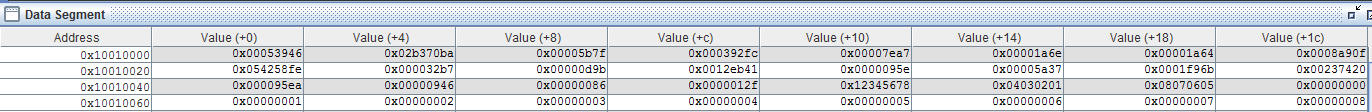

For a 2-way set-associative cache model (above), determine the content of the cache after each of the following instructions are executed (note that you may have to replicate and expand the table to include additional set indices)

lw $t0, ($t1) #

t1=0x1001 0000, after execution, t0=0x00053946

sw $t0, 4($t1) # the value

0x00053946 is stored to 0x10010004

lw $t0, ($t2) # t2=0x1001 0020, after

execution, t0=0x054258fe

sw $t0, 8($t2) # the value

0x054258fe is stored to 0x10010028

lw $t0, ($t1) # t1=0x1001 0044, after

execution, t0=0x00000946

sw $t0, ($t1) # the value is written

back to the same address

lw $t0, ($t1) # t1=0x1001 0060, after execution,

t0=0x00000001